For part 3, we evaluate the history of the third kind of evidence in cognitive neuroscience:

A normal subject is asked to do a task. While they are doing the task, a scientist observes their brain in action.

For many readers, this will sound like fMRI, where a person lies down in a huge machine, is instructed to think about something or view something, and then, voilà, certain parts of their brain light up. But in fact, this kind of logic has been used for much longer than fMRI. Before I launch into a description of fMRI, let's follow the chain of evidence that gets us there: the neuron is the basic unit of the brain, across animals, and some animals have brain systems that are quite similar to human brain systems.

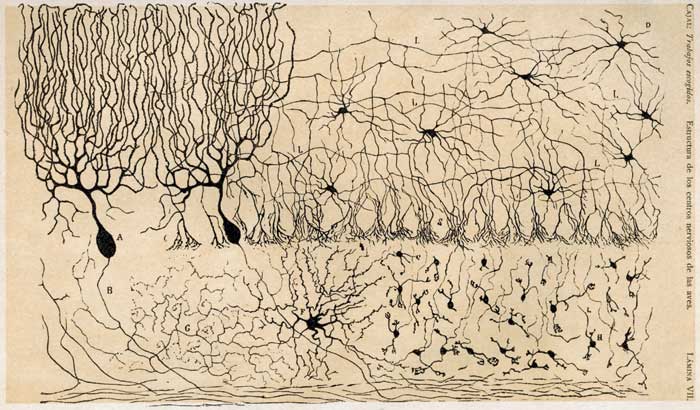

The neuron is the basic unit of the brain. This had to be discovered, and was once the subject of debate. While Schwann and Schleiden proposed that the cell was the basic functional unit of life in 1838, it strangely took some time for this to be applied to the brain. Advances in microscopy and lenses allowed Purkinje to find the first neurons in 1837... Wait, what's that? Well, even though in retrospect we see that Purkinje discovered the first of many nerve cells, at the time this was not enough evidence to counter the camp of scientists who thought the brain was fibrous tissue and not cells. Later physiologists added to the evidence: Otto Deiters, whose work, published after his death in 1865, documented other neurons, and Camillo Golgi, who discovered a new method of staining in 1873 and showed the world incredibly clear pictures of the brain.

But Santiago Ramon y Cajal won over the doubters by using Golgi's staining method to show individual neurons clearly, starting in 1888. He shared the 1906 Nobel Prize with Golgi. Except that Golgi still thought the brain was a mass of undifferentiated tissue, and said so in his Nobel lecture, while Ramon y Cajal used his lecture to try to refute Golgi and show that the brain was made of individual neurons. By the way, the blog Neurophilosophy has a great synthesis of this story of the discovery of the neuron.

The brains that Golgi and Ramon y Cajal were studying were animal brains, but as they established the neuron as the fundamental unit, others began to discover how these neurons worked. In 1921, Otto Loewi discovered that neurons transmit their messages chemically as well as electrically (Nobel Prize, 1936).

Animals have brain systems, just like us

Why does any of this matter? Well, these discoveries (along with others, and technological developments in making really small, narrow glass pipettes) make it possible to record electrical activity from a single neuron while the animal is awake or active. This allowed Eric Kandel (Nobel Prize, 2000) to investigate how memory works at the cellular level, mostly by working with sea slugs (Aplysia). It allowed Hubel and Wiesel (Nobel Prize, 1981) to investigate (using cats) how a certain pattern of light on the retina results in a certain pattern of activity in the brain. These studies are called single cell recordings. Basically, a very fine electrode (such as a hollow-tipped glass pipette) is placed in the brain of an animal, inside or right next to a single neuron, to record its activity; then we present the animal with a stimulus or task and observe what makes that particular neuron activate.

With humans, this was generally not possible. So live human brain recording began with the EEG, which measures the brain's electrical activity. When a large number of neurons fire together, you can detect the electricity with electrodes placed on the scalp. This technology had the great advantage of detecting neural activity at almost the exact moment it happened. The disadvantage was that it was not very good at telling where the neurons were firing, because it could only detect broad patterns of electricity in general brain locations. In technical terms, we say EEG has high temporal resolution (very sensitive to time) but low spatial resolution (it cannot tell exactly where in the brain the activity is). But despite its lack of localization, EEG is still used today, and has given us much insight into how the brain is connected to the mind.

After EEG, PET scans were an advance, because they could tell with much more accuracy which areas of the brain were active. For PET to work, you need to be injected with a radioactive tracer, which your blood then carries to your brain, making it glow. When a neuron fires, it needs energy to keep firing (its signal is electrical, and that takes energy). So the active area effectively sends a message for more blood, and the blood (ooh, it glows!) gets sent to that area.

PET scans gave way to fMRI, which similarly uses blood flow as a marker, but this time with no radioactive tracer. The MRI machine uses an enormous magnet, and fMRI takes advantage of the fact that hemoglobin, the molecule that carries oxygen in your blood, has different magnetic properties depending on whether or not it is carrying oxygen. In fact, when your brain sends blood to a set of firing neurons, it sends more oxygen than they use. The fMRI detects that surplus of oxygenated blood, using the BOLD (Blood Oxygen Level Dependent) response.

Although fMRI has been in use for twenty years, and the basic principles remain the same, the methods of its use have changed drastically. For one example, consider the computational demands of a brain scan. Instead of a simple graph of one neuron's activity (firing or not) over time, as in single cell recording, fMRI produces full 3-dimensional data: thousands upon thousands of voxels (volumetric pixels), measured again and again over time. Increases in computing power and magnet strength have improved fMRI techniques, which are still imperfect, and whose results can seem convincing well beyond their actual scientific utility.
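To make the computational point concrete, here is a rough back-of-the-envelope sketch comparing how many numbers each kind of recording produces over the same session. Every parameter is an assumption chosen only for illustration, not taken from this post or from any particular study: the single neuron's activity is binned into 1 ms "fired or not" values, the fMRI volume is 64 x 64 x 30 voxels, one volume is acquired every 2 seconds, and the session lasts 10 minutes.

```python
# Back-of-the-envelope comparison of data volume: one neuron vs. a whole-brain fMRI scan.
# All numbers are illustrative assumptions, not values from any particular study.

def single_cell_samples(duration_s: float, bin_ms: float = 1.0) -> int:
    """One neuron: a single value per time bin (spike or no spike).

    Assumes the raw recording has already been reduced to 1 ms bins;
    real rigs sample the voltage much faster than this.
    """
    return int(duration_s * 1000 / bin_ms)


def fmri_samples(nx: int, ny: int, nz: int, duration_s: float, tr_s: float) -> int:
    """fMRI: one value per voxel per volume, with one volume every tr_s seconds."""
    voxels_per_volume = nx * ny * nz      # the 3-D grid of volumetric pixels
    n_volumes = int(duration_s / tr_s)    # how many whole-brain snapshots we get
    return voxels_per_volume * n_volumes


if __name__ == "__main__":
    duration = 600  # assumed 10-minute session, in seconds
    cell = single_cell_samples(duration)
    brain = fmri_samples(64, 64, 30, duration, tr_s=2.0)  # assumed grid size and timing
    print(f"single cell: {cell:,} values")
    print(f"fMRI:        {brain:,} values")
```

Under these assumptions the single neuron yields 600,000 values and the fMRI session 36,864,000, and each fMRI value also comes tied to a 3-D location that has to be analyzed, which is where the demand for computing power comes from.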

This logic, of observing a brain while the animal is in action, has been around for a long time, whether you start with Galvani's frogs in 1771, Helmholtz measuring the (non-zero) speed of neural transmission in 1852, or Kandel's studies of the cellular basis of memory in the 1960s.

Ok, so now I am at the conclusion of my introduction, and it is probably not much better than going to Wikipedia (although after doing this, I started to edit the cognitive neuroscience Wikipedia entry). What was the point?

To return to the main point: cognitive neuroscience is not young. The connection between mind and brain has been a topic of experimental study and debate for at least 200 years. But those 200 years are not simply progress, one scientist taking another's discoveries and adding to them. Golgi and Cajal, despite making amazing contributions, disagreed in their Nobel Prize lectures on whether the brain was made of neurons. I have not described developments in whether brain cells can change (neuroplasticity), but that was a lively topic of debate for some time too. If we look closely at the history of most sciences, we see that science progresses in fits and starts. In psychology and cognitive neuroscience, we can see how advances were tied to advances in technology (lenses, magnets, electricity), to advances in other fields (PET depends on safe radioactivity), or even to tragedies (head injuries in WWI resulted in many observations). When we look at the history, we see that cognitive neuroscience draws on physics, chemistry, biology, and psychology. In other words, despite the apparent immaturity of knowledge at every level, there is still communication and influence.

Returning to the exercise that brought all this about (evaluating evolutionary psychology), I am left agreeing with another commenter on TNC's blog: it is relatively pointless to assign maturity or youth to an entire subfield. We should instead consider individual claims, and evaluate the evidence for these claims, in the context of a broad background in related scientific knowledge.

Comments:

Just wanted to point out something else. The strategy, if you will, of seeing what happens to patients with damage to particular parts of the brain has largely been the purview of neurologists and neurosurgeons. To overgeneralize a little, psychiatrists have generally been somewhat less enamored of that approach, and often conceptualize things in terms of chemical systems.

Dopamine provides pleasure and thus plays a role in addiction, but too much of that feel-good chemical (or some other problem in its circuits) and you get schizophrenia. Serotonin plays a role in mood; mucking around with it is the basis of Prozac and Zoloft, but also of some anti-migraine drugs.

In the lab, mice bred to have particular genes in these and other chemical systems in the brain not working properly ("knockout mice") have been useful models for studying behavior. Figuring out how that relates to people can be a challenge, though.

Finally, something that brings together several of the things I mentioned is optogenetics, which combines highly selective lesioning, genetically engineered animals, and, of all things, light, to study behavior. I recently had the opportunity to hear the author of an article on it speak. As if the neuroscience isn't interesting enough, the bioengineering it takes to get it to work is amazing. This is one of those situations where both the results and the methods are seriously gee whiz.

Thanks so much for reading (and commenting).

Part of my argument (partly to continue our conversation from TNC's place) is that it is not that important to distinguish between neurologists, neurosurgeons, psychiatrists and psychologists. Pavlov was a physiologist, and so was Fechner. Freud was trained as a neurologist, but left that behind. As you mention, knowledge gained from knockout mice or neurochemistry also influences our conception of the connection between mind and brain.

While I am a big fan of cognitive neuroscience (and sort of a professional; I am a cognitive psychologist), I am still in agreement with neocortex's skepticism about the reach of cog neuro. I think the dopamine/serotonin talk that has gotten so much press is a drastic oversimplification. For example, there is some recent evidence that drugs that work in the opposite direction from SSRIs (that facilitate, rather than inhibit, serotonin reuptake) actually have the same effect on mood. Similarly, the dopamine system (if we can even call it that) is massive and complicated, reaching into motor control (Parkinson's) as well as reward systems.

I remember that you mentioned back at TNC's that I interpreted some of the original comment as nihilistic, which I think is true. But I recognize that the intention was not nihilistic, even if its effect was. In other words, when scientists say to each other, "We really don't know how much the amygdala regulates emotion," it is different from saying that to a popular audience that is eager (in this case) to characterize an entire domain of science as illegitimate. In teaching undergraduates neuroscience, it is a hard balance to strike between presenting the amazing amount of current knowledge and presenting the huge world of unknowns, leaving some humility. While the unknown never seems to shrink, it is important to acknowledge that the known does, in fact, grow.

The distinctions among neuroscience MDs (or DOs) are partly practical: neurosurgeons will always be out standing in their own fields, in more ways than one. With psychiatry and neurology, a divergence that occurred decades ago has translated into whether one feels more at home on a locked psych ward or in an ICU with a patient who is comatose.

I didn't mean to overstate the virtues of the neuropharmacologic approach, but rather to suggest that attempts to merge it with localization-based methods should happen more than they do. I torment a dopamine neuropharmacologist friend by teasing her that I can't take a field seriously when a model of whether something ultimately gets up- or down-regulated can be flipped just by changing the number of intervening inhibitory synapses from even to odd.

The whole hard science/soft science battle has always struck me as pointless. A physical chemist can describe a molecule in a box with great precision. A psychologist can describe someone's mood, albeit with somewhat less precision, which isn't surprising because that's more complicated than a molecule bouncing around.

Maybe some day we'll have the mathematical and statistical tools to allow the reductionists and more holistic folks to collaborate, rather than shouting themselves hoarse across such a huge divide.

