For part 3, we evaluate the history of the third kind of evidence in cognitive neuroscience:

A normal subject is asked to do a task. While they are doing the task, a scientist observes their brain in action.

For many readers, this will sound like fMRI, where a person lies down in a huge machine, is instructed to think about something or view something, and then, voilà, certain parts of their brain light up. But in fact, this kind of logic has been used for much longer than fMRI. Before I launch into a description of fMRI, let's follow the chain of evidence that gets us there. The neuron is the basic unit of the brain, across animals. Some animals have brain systems that are quite similar to human brain systems.

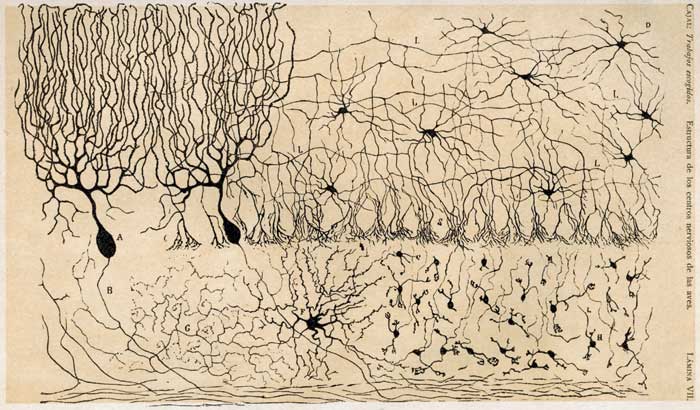

The neuron is the basic unit of the brain. This had to be discovered, and was once the subject of debate. While Schwann and Schleiden proposed in 1838 that the cell was the basic functional unit of life, it strangely took some time for this to be applied to the brain. Advances in microscopy and lenses allowed Purkinje to find the first neurons in 1837... Wait, what's that? Well, even though in retrospect we see that Purkinje discovered the first of many nerve cells, at the time this was not enough evidence to counter the camp of scientists who thought the brain was fibrous tissue and not cells. Later physiologists added to the evidence: Otto Deiters, whose work, published in 1865 after his death, documented other neurons, and Camillo Golgi, who discovered a new method of staining in 1873 and showed the world incredibly clear pictures of the brain.

But Santiago Ramon y Cajal was responsible for breaking through the doubters, by using Golgi's staining methods to show clearly the neurons in the cerebral cortex, in 1888. He shared the Nobel Prize with Golgi in 1906. Except that Golgi still thought that the brain was a bunch of undifferentiated tissue, and said so in his half of the lecture, while Ramon y Cajal used his half to try to refute Golgi and show that the brain was made of individual neurons. By the way, the link above is from the blog Neurophilosophy, and is a great synthesis of this story of the discovery of the neuron.

The brains that Golgi and Ramon y Cajal were studying were animal brains, but as they discovered the fundamental unit of the neuron, others began to discover how these neurons worked. In 1921 Otto Loewi discovered that neurons transmit their messages chemically (Nobel Prize, 1936) as well as electrically.

Animals have brain systems, just like us

Why does any of this matter? Well, these discoveries (along with others, and technological development in making really small, narrow glass pipettes) allow for the possibility of recording electrical activity from a single neuron while the animal is awake or active. This allowed Eric Kandel (Nobel Prize, 2000) to investigate how memory works at the cellular level, mostly by working with sea slugs (Aplysia). It allowed Hubel and Wiesel (Nobel Prize, 1981) to investigate (using cats) how a certain pattern of light on the retina results in a certain pattern of activity in the brain. These studies are called single-cell recordings. Basically, a very small hollow-tipped needle is placed inside the brain of an animal (inside a single neuron, to record its activity), then we present the animal with a stimulus or a task, and observe what makes that particular neuron activate.

With humans, this was not possible. So live human brain scanning began with the EEG, which measures the brain's electrical activity. When a bunch of neurons fire, you can detect the electricity by placing detectors on the scalp. This technology had the great advantage of being able to detect neurons firing at the exact moment that they fired. The disadvantage was that it was not very good at telling where the neurons were firing, because it could only detect broad patterns of electricity in general brain locations. In technical terms, we say EEG has high temporal resolution (very sensitive to time) but low spatial resolution (it cannot tell exactly where in the brain the activity is). But despite its lack of localization, EEG is still used today, and has given us much insight into how the brain is connected to the mind.

After EEG, PET scans were an advance, because they could tell with much more accuracy which areas of the brain were active. For PET to work, you need to be injected with a radioactive tracer, which your blood then carries to your brain, where it "glows" for the scanner. When a neuron fires, it needs energy to keep firing (the signal is electrical, and that takes energy). It sends a message for more blood, then the blood (ooh, it glows!) gets sent to that area.

PET scans gave way to fMRI, which similarly uses blood flow as a marker, but this time with no radioactive tracer. The MRI machine uses an enormous magnet to detect the difference in magnetic properties between oxygen-rich and oxygen-poor blood. In fact, when your brain sends blood to a set of firing neurons, it sends too much. Most of the oxygen in this blood gets used. Some does not. The fMRI detects that extra oxygen-rich blood, using the BOLD (Blood Oxygen Level Dependent) response.

Despite the fact that fMRI has been in use for twenty years, and the basic principles remain the same, the methods of its use have changed drastically. For one example, consider the computational constraints of taking a scan of the brain. Instead of the one-dimensional trace (firing or not firing, over time) of a single-cell recording, fMRI has full three-dimensional data over time: thousands upon thousands of voxels (volumetric pixels). As computing power and the power of the magnets have increased, the techniques in fMRI have improved, though they are still imperfect, albeit convincing well beyond their actual scientific utility.
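To get a rough feel for that difference in scale, here is a back-of-the-envelope sketch in Python. Every number in it (a 10-minute session, a 1000 Hz sampling rate for the single cell recording, a 64 x 64 x 36 voxel grid, one whole-brain volume every 2 seconds) is an assumption I picked for illustration, not a description of any particular scanner or study.

```python
# Illustrative sizes only; every number below is an assumption, not a measurement.

def samples_in_recording(duration_s, sampling_rate_hz):
    """Number of values in a one-channel recording (one value per time step)."""
    return int(duration_s * sampling_rate_hz)


def values_in_fmri_run(grid, duration_s, seconds_per_volume):
    """Count voxels, whole-brain volumes, and total values in an fMRI run."""
    x, y, z = grid
    voxels = x * y * z                               # one value per voxel...
    volumes = int(duration_s // seconds_per_volume)  # ...per whole-brain volume
    return voxels, volumes, voxels * volumes


duration_s = 600  # a hypothetical 10-minute session

single_cell = samples_in_recording(duration_s, sampling_rate_hz=1000)
voxels, volumes, fmri = values_in_fmri_run((64, 64, 36), duration_s, seconds_per_volume=2)

print(f"single cell recording: {single_cell} values in 1 time series")
print(f"fMRI run: {voxels} voxels x {volumes} volumes = {fmri} values")
```

Under these made-up numbers, the single cell gives one long, finely timed series, while the fMRI run gives a very large number of short, coarsely timed series, one per voxel. That is the trade-off between temporal and spatial detail in miniature.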

This logic, of observing a brain while the animal is in action, has been around for a long time, depending on whether you start with Galvani's frogs in 1771, or Helmholtz measuring the (non-zero) speed of neural transmission in 1852, or with Kandel's studies of the cellular basis of memory in the 1960s.

Ok, so now I am at the conclusion of my introduction, and it is probably not much better than going to Wikipedia (although after doing this, I started to edit the cognitive neuroscience Wikipedia entry). What was the point?

To return to the main point: cognitive neuroscience is not young. The connection between mind and brain has been a topic of experimental study and debate for at least 200 years. But these 200 years are not simply progress, one scientist taking another's discoveries and adding to them. Golgi and Cajal, despite making amazing contributions, disagreed in their Nobel Prize lectures on whether the brain was made of neurons. I have not described developments in whether brain cells can change (neuroplasticity), but that was a lively topic of debate for some time too. If we look closely at the history of most sciences, we see that science progresses in fits and starts. In psychology and cognitive neuroscience, we can see how advances were tied to advances in technology (lenses, magnets, electricity) or advances in other fields (PET depends on safe radioactivity) or even tragedies (head injuries in WWI resulted in many observations). When we look at the history, we see that the history of cognitive neuroscience includes physics, chemistry, biology, and psychology. In other words, despite the apparent immaturity of knowledge at every level, there is still communication and influence. Returning to the exercise that brought all this about (evaluating evolutionary psychology), I am left agreeing with another commenter on TNC's blog: it is relatively pointless to assign maturity or youth to an entire subfield. We should instead consider individual claims, and evaluate the evidence for these claims, in the context of a broad background in related scientific knowledge.

Tuesday, May 31, 2011

Monday, May 30, 2011

Introduction to the History of Cognitive Neuroscience (Part 2)

Ok, so last post I described the first part of the history of methods in the cognitive neurosciences: when behavior goes wrong, then finding out what's wrong with the brain. For this section, I'll be discussing when we intentionally damage, or directly zap, the brain. Before you get too creeped out, this has mostly (the mostly is important) been done on non-human animals. There are ethical issues with animal research, which have been noted and struggled with since the beginning of animal research. Marshall Hall, a pioneering researcher, laid out several principles for doing animal research (in 1831). These principles are pretty much in place now. How are they applied? For example, principle 4 ("Justifiable experiments should be carried out with the least possible infliction of suffering (often through the use of lower, less sentient animals)") applied means that if we are studying individual neurons, we should study the simplest creature possible, whereas if we are studying, say, the visual system, we should study the "lowest" animal that is comparable to humans (in this case, the cat). All the while, we should make sure the animals are as comfortable as possible.

So, where should we begin? The first psychologist was not a psychologist at all, but a German physiologist named Gustav Fechner. Whereas Descartes thought that the brain communicated with the body through a series of tubes (that's right, Ted Stevens), the physiologists of Fechner's era knew, following Galvani, that it was electricity. Not only that, but his contemporary Hermann von Helmholtz showed that it took time for a signal to travel along the nerve to the leg (of a frog). It was previously thought that brain-body communication was immediate. Now it turned out to take a very, very short amount of time, but that instant could be measured. And Fechner and his fellow German physiologists went about measuring the relationship between the physics in the world (like light, or sound, or electric shock) and our psychological experience of the physics. Now that each could be measured, we had a psychophysics. Fechner was born in 1801, and so most of this work was in the early and middle 1800s.

Of course, there were skeptics about the use of zapping a frog in explaining the human mind. William James himself wrote (in 1907, in On Pragmatism):

"Many persons nowadays seem to think that any conclusion must be very scientific if the arguments in favor of it are derived from twitching of frogs' legs—especially if the frogs are decapitated—and that—on the other hand—any doctrine chiefly vouched for by the feelings of human beings—with heads on their shoulders—must be benighted and superstitious."

But there were now neurons, and soon there would be neurons working together. Donald Hebb, and much later, Eric Kandel, began to discover the way, at the cellular level, that neurons communicate and remember. As the decades went by, different animals (from sea slugs, to cats, to, in very rare cases, monkeys) had different parts of their brains surgically changed, and their behavior was recorded. A map of the brain was beginning to emerge.

I'll mention a few more cases of changing the brain, then observing behavior. The first was an experimental surgery to relieve extreme epilepsy. It was 1953, and 27-year-old Henry Molaison was referred to a reckless doctor named William Beecher Scoville. Henry's epilepsy was localized to a structure in his brain located in the middle of his temporal lobes, on the side, tucked underneath. Scoville removed an entire section of his brain around this area, and thereby improved Henry's epilepsy. After recovery, Henry could see, hear, walk, talk, breathe, eat and drink just fine. But he needed to be institutionalized for the rest of his life. Why? Because Scoville unwittingly removed Henry's ability to make new memories. Someone could go into Henry's room, meet him, leave, wait a minute, then reenter and meet Henry again. For Henry, it would be "for the first time". Later studies showed that Henry could learn new skills, but would never remember having practiced them, so the damaged memory seemed to be only his conscious, or explicit, memory.

In the past decade a new technology has allowed us to selectively "damage" human brains, but only temporarily. Imagine applying a little shot of anesthesia and paralysis to a certain small group of neurons and seeing what happens. This is exactly (ok, not exactly, but close enough) what transcranial magnetic stimulation is able to do. Zap Broca's area with a targeted magnetic field, whammo, you can't talk. For a few minutes. Then you are fine.

While Henry Molaison (or Patient HM, as he was known for most of his life in the memory literature) was a uniquely tragic, yet informative, patient, for over a hundred years cognitive neuroscientists have been changing the brains of animals and observing and recording the ensuing behavior. This has given us tremendous insight into how the mind and brain are linked, from organization of the visual system, to different memory systems, to systems of how our brains control our muscles. With the progression of technology to TMS, we are now able to very selectively "damage" human brains, and observe the associated behavior. But this is built on an increasingly detailed map of the brain, which in turn was surveyed using thousands of human and animal studies.

Introduction to the History of Cognitive Neuroscience

Recently, spurred on by the Kanazawa business, Ta-Nehisi Coates asked about evo psych in general in one of his special "Talk to Me Like I'm Stupid" sessions. This set off a lively discussion (mostly piling on about how terrible it is) but led down an interesting road when a commenter named neocortex noted that evo psych makes its claims on the connection between evolution and the brain based on our limited understanding of the link between brain and behavior. She urged everyone to consider that cognitive neuroscience is still very young as a field, and therefore evo psych is necessarily built on a shaky foundation. Several other people (besides me) disagreed with the metaphor of "foundations" for different levels of explanation (you can explain atoms, molecules, neurons, brains, behavior). But I have continued to think about this, because some of the misconceptions shared by neocortex and others (her comment was later elevated by Coates, and commended as excellent) get at a fundamental misunderstanding of a lot of science, and psychology is often a victim of this "we don't know anything" attitude. I think what most disturbed me was a seemingly small error in words, which is a linchpin in my argument against her point of view.

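Here is the quote:

"Disciplines like functional neuroimaging (which shows us how different thoughts and actions activate different brain regions) have only been around for a couple of decades or less"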

What's wrong? Functional neuroimaging is not a discipline, but a tool. The discipline she is speaking of is cognitive neuroscience, but she acts as if the tool is the discipline. We don't say that biology is only as old as the electron microscope or that physics is only as old as the supercollider, but yet this statement passes for truth, even from someone who has extensive undergraduate experience in neuroscience. Interestingly, increasing knowledge in a certain field can sometimes lead new learners to conclude that there are so many questions left as to render the current state of knowledge tiny in comparison.

I think we can understand a lot about the nature of science by studying the history of science, and distinguishing questions from tools, so here is my contribution to correcting that misconception.

The field of cognitive neuroscience is actually quite old. What is the connection between our biology and our thoughts? How does our brain create our mind? This question has been around longer than any consensus that our brain does create our mind, to the very beginning of biology itself. And scientific reasoning (however basic) has been used from the start.

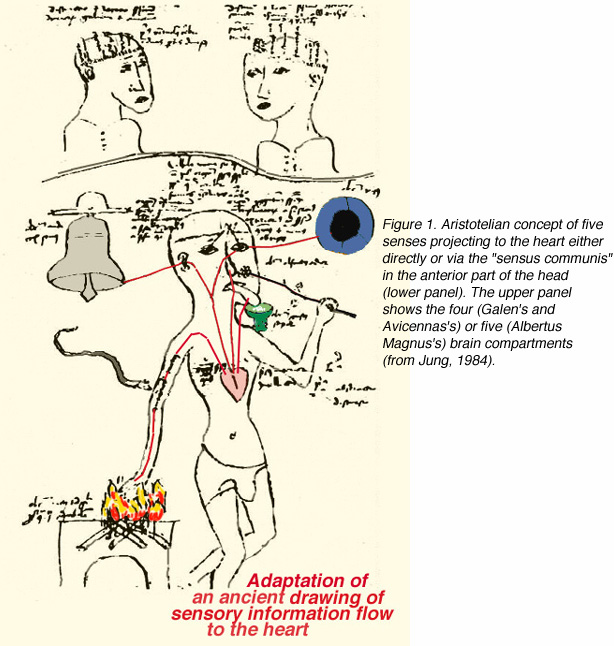

We start with Aristotle, who thought that the brain was responsible for cooling the blood, while the heart was the seat of reason. Why? One can survive a blow to the head, but not to the heart. The heart must be more important for conscious thought.

Of course, he was wrong, but his logic was impeccable, and forms one third of the logic of modern cognitive neuroscience.

There are three basic ways that cognitive neuroscience gains knowledge about the brain. This is not the only way of classifying the logic of cognitive neuroscience, but it nicely draws attention to the history of evidence in cognitive neuroscience.

1) We observe a change in behavior, due to known injury or biological disease. We classify this behavior. Then, we figure out where, and how, the brain injury (or disease) correlates to the behavior. In this case, the behavior is known first (think memory problems in Alzheimer's, or amnesia after an accident), then later the biological basis is described. I will discuss this part today, and continue with the others tomorrow.

2) A known part of the brain is either injured or stimulated (on purpose). In this case, we know the part of the brain injured (technical term: lesioned) and then we observe the corresponding behavior change.

3) A normal subject is asked to do a task. While they are doing the task, a scientist observes their brain.

We combine these techniques, which are inherently "connective", with basic psychology (a pure psychological task, with a psychological measure) and basic neuroscience (dissection and staining of brain cells, for example), each of which can be quite insightful in cognitive neuroscience.

So, what is the history of these techniques? People have been getting whacks to the head forever, and doctors have been investigating them forever, but a few important patients form the beginning of modern cognitive neuroscience for a few reasons. First, for most of human history, weapons have been high on large blunt force, and low on damage to a specific area. Our ability to gather evidence on what brain area does what (and all of neuroscience is not only what brain _area_ does what, but more on that later) using people who have brain damage is dependent on how big that damage is. Further, the issues of drainage and infection made most head injuries fatal in the short term, if not immediately. I think you could make the case that the first important case in the history of neuroscience was as much to do with gunpowder and the germ theory of disease as anything else.

Phineas Gage (the Wikipedia page on him is actually excellent) was a foreman building the railroad in Vermont. When the railroads needed to blow up a mountain, they:

1) Dug a deep hole, down to the hard rock

2) Poured some gunpowder in it and set a fuse

3) Filled the hole back up with sand

4) Tamped down the dirt with a long iron rod

5) Lit the fuse

6) Ran away

This is a dangerous activity. And don't skip step 3. Be very careful that you don't skip step 3, because banging an iron rod directly on gunpowder, with hard rock below, tends to make sparks. Which gunpowder likes. A LOT.

So, poor Phineas skipped step 3: he was looking to the side and thought the dirt was in. And the iron rod he was tamping with flew up the hole, hit him right under the chin, and flew out the top of his head, taking a big chunk of his brain with it.

But Phineas walked to the hospital, and was "ok" the next day. His personality seemed to change, and he wasn't very good at making decisions, but that was about it.

Let's stop. Do we know what the frontal lobe does? No. And this is just one brain. Just one Phineas. And we don't really know exactly what the damage was, or exactly how much his behavior changed. But it starts to give us pretty good evidence that this part of the brain (or at least the part that got blown out) isn't important for walking or talking or breathing. It establishes some boundaries.

Ok, next stop, Tan. Tan was a patient who could only say Tan. But his doctor noticed that he could also walk, and breathe. Just not talk. Tan did not have a brain injury, but a brain disease. His doctor, Paul Broca, hypothesized that his ability to produce language was disrupted, but his ability to comprehend language was spared. Tan could follow simple directions. When Tan died, Broca did an autopsy and found damage to the brain on a certain part of the left side of his cerebral cortex. Unlike Phineas, Tan was not unique. Broca was a specialist in aphasias, people who had difficulties with language. He had a large set of patients with language problems, and a lot of them who had problems producing language had damage to that area, whether by syphilis (which was Tan's disease) or by gunshot wound.

Another doctor, named Karl Wernicke, had many other patients, who seemed to have no trouble producing speech, but could not understand it. At autopsy, these patients also had damage to the left side of their cerebral cortex, but in a different place than Broca's patients.

Phineas Gage had his accident in 1848, and died 12 years later.

Tan died in 1861.

Wernicke described his group of patients in the 1870s.

So, by the time the 1870s are up, we have one famous patient, and several groups of patients, all attesting to a pattern of behavior that correlates to certain kinds of brain damage. This work continues, with people having strokes, getting gunshot wounds in war, and reaching advanced stages of certain diseases. They start to give us a picture of certain brain areas being responsible for certain tasks and behaviors. This has been going on for at least 150 years. What has happened in this time? Similar logic, but we have improved our ability to detect the damage and describe the behavior. We can now detect the damage with (in chronological order) x-rays (1895), PET scans (1961), CT scans (1972), and MRI scans (1977). Used this way, the scanning technology above only describes anatomical structure (and damage), not brain activity. We'll have to wait a little until I describe techniques to scan brain activity. Our ability to describe the behavior has also improved, with millisecond timers, with behavioral techniques such as Gazzaniga and Sperry's split brain studies, and with different kinds of standardized neurological exams.

So, what I have described above is one main class of evidence for the connection between brain and behavior. Of course, we are not "there yet" (because there is no "there" there). But at each stage, there is mounting support for a general hypothesis ("Certain areas of the brain serve highly specialized functions") as well as mounting support for individual hypotheses ("The left posterior inferior frontal gyrus is important for processing and producing grammar").

Tomorrow, the second kind of evidence: intentional brain damage or stimulation.

Wednesday, May 25, 2011

Spatial Navigation for MightBeLying

Over at TNC's blog, there was a big discussion about evolutionary psych, which bled into me defending cognitive psych as a real science not dependent on neuroscience. I volunteered to try to explain the links between cognition and neuroscience for any given topic. Here is my response to the first brave soul to shout: spatial navigation:

Ok, first, with a little bit of an intro.

Insight into how the mind and brain work, and how our conscious experience and behavior map onto the biology can be found in several main ways:

1) Extirpation: Damaging the brain of an animal and seeing what happens. This has been happening for a very long time. Rats do spatial navigation. Rats have brains. Understanding an animal model can help. Of course, you have to map the rat brain to the human brain, which is tricky. But not impossible.

2) Clinical method: Someone gets brain damage, then we figure out how it affects their behavior, and relate it to the brain damage. More generally, this means relating behavior, or experience, to some sort of activity (or lack of activity) in the brain. Some fMRI falls into this category.

3) Electrical stimulation: Again, mostly with animals, but not entirely. Direct stimulation of the brain, then seeing what happens to behavior.

Let's start back with the demise of Skinnerian behaviorism. Skinner and his adherents did rely on rats to do many experiments, but they believed that rats learn responses to stimuli, nothing else. In other words, there is no need to talk about spatial navigation in the rat (or in the human), there is only a set of responses to a set of environmental situations.

Edward Tolman, who investigated rats in mazes, started coming up with evidence that rats have mental maps. This was kind of a big deal (in 1948). It means that we have to investigate what the shapes of those maps are, and we have to open the black box of the brain. … and a lot of stuff happens, and we now have models where a certain kind of damage to the rat hippocampus, in a certain place, leads to a certain pattern of rats being lost, or forgetting, or being unable to learn new mazes.

So, given that rats have maps in the brain, of course we do too. So what kinds of maps do we have in our brain? How does the software relate to the hardware?

Well, one snarky way of summarizing 50 years of cognitive psychology research is that rats are smart and people are dumb. So, we suck at spatial navigation, especially compared to a lot of our animal cousins. We navigate with vision, not with smell, like salmon, or super-vision, like desert ants (not to be confused with dessert ants). And the way that we navigate with vision (and memory) utilizes shortcuts and biases. We regularize, impose a pattern when there isn’t one, make things line up north south, or east west. Many people think that Reno is east of San Diego (it isn’t) because we don’t really have an accurate map in our heads, we have a map biased by some easy to remember rules (California is west of Nevada).

Ok, the neuroscience side: the hippocampus seems important. You mess with the rat hippocampus, you mess with their navigation. With brain scanning, we can look at people’s hippocampi. One famous study imaged the brains of London cab drivers at different stages of their careers. The amount of experience was related to the amount of knowledge, which in turn was related to the size of a particular part of their hippocampus.

Of course, spatial navigation can't be understood in a vacuum, because it involves perception and memory (at least), both in behavior, as well as networks of brain areas. It is not quite right to say that memory (or spatial navigation) happens in the hippocampus, and that perception happens in the back of your brain (occipital cortex), but it isn’t as wrong as the phrenology of the past. We are not, for example, going to realize that we’ve been totally wrong, and that vision happens in the front of the brain, not the back, and that language areas are actually buried under everything in the midbrain. But we may discover that the way we have been thinking about the hippocampus is slightly wrong, in that it is a critical part of the memory circuit, not the place where memory happens.

Or you could read people who know much more than me, and who put it in a much better, clearer, and more organized fashion:

[Barbara Tversky at Stanford](http://www-psych.stanford.edu/~bt/space/index.html). This is a [more comprehensible paper](http://www-psych.stanford.edu/~bt/space/papers/levelsstructure.pdf) for the layperson, at least the first few pages.

[Tim McNamara at Vanderbilt](http://sitemason.vanderbilt.edu/mcnamaralab/mcnamaralab/currentprojects)

Or do some of the reading for this course: http://cogs200.pbworks.com/w/page/10991738/FrontPage

Just taking a look at this course should tell you that, within one course, you can see the work being done from the level of neurochemistry within neurons, to single neurons, to brain structures, to behavior. All complementing each other, filling in gaps, mutually dependent.

Ok, that was not very well organized, but it gives a taste of what the research on spatial navigation looks like. It involves cells, brain areas, brains, bees, salmon, rats, London cabbies and regular people. Not all of it converges on a great explanation for how exactly spatial navigation works in all brains, or even in our brain. But we know more than we did twenty years ago.

Tuesday, May 17, 2011

Some thoughts about educational games for the iPad

One of the reasons I treated myself to an iPad last year was that I saw great potential for educational (and fun) games for kids. I was hoping we could further delay getting a Wii or DS or other console, and nudge the kids into some educational games to boot.

I have been pleased so far, but of course the games are mixed. I thought I would share some thoughts about different kinds (and qualities) of educational games, using a few examples as case studies.

My favorite pair of educational games for the iPad is the Stack the States (and Countries) games.

The game works like this: You answer trivia questions about the states, and when you get an answer right, you get to stack the states. Once you reach a certain line, you are awarded a state on your map. The gameplay is a simple physics/puzzle game, where you have to figure out how the shapes fit together, and balance them to reach the line.

Why am I a fan? First, it is pretty fun. The physics-based puzzle gameplay is challenging in a simple video game way.

The second reason that I like it is that it does a good job mapping the relevant dimensions of gameplay onto good educational dimensions. What the heck does that mean? Instead of just being a glorified trivia game, where you get points for answering questions right, the stacking task integrates relevant state facts into the game itself. In this specific case, you naturally learn the shapes and sizes of the states as you do the stacking. I bet that after a few hours of playing this game, kids could do a pretty good job sorting states from smallest to biggest, without even trying to memorize this.

For improvement, I would love a difficulty setting for the trivia questions, which are fairly limited right now. But for a $3.00 purchase, I have gotten more than my money's worth. I highly recommend it.

Another game, which I don't like as much but which is still ok (and is typical educational software fare), is called Math Ninja.

This is a very simple arcade shoot-'em-up, where you answer math questions (addition, subtraction, multiplication, division) in between rounds of shooting evil robot cats and dogs. This game follows the model of bribing kids to do math drills by interspersing them with a video game. I am not totally against this approach (and this game is a pretty good execution: you don't just get points, but you unlock weapons by answering more questions quickly). Sometimes you just need to practice, and drill, and math facts are a likely candidate. I am ok with bribing my kids to memorize the times tables just so long as that doesn't become how they think of all math.

Anyways, those are a few quick thoughts. Any other iPad educational game recommendations out there? The boys have discovered the periodic table of elements, thanks to They Might Be Giants. I wonder if there is a game to be made from that?
